Quanhom Technology Co., LTD develops and produces thermal infrared optics. Its high-precision product range includes SWIR/MWIR/LWIR infrared lens assemblies, eyepieces, infrared lens elements, etc.

Find Your Product

Whatever your requirements, our know-how, expert team, and technology are all there, ready to provide you with the precise, fully-tailored solution you need.

Infrared optics

294 products found

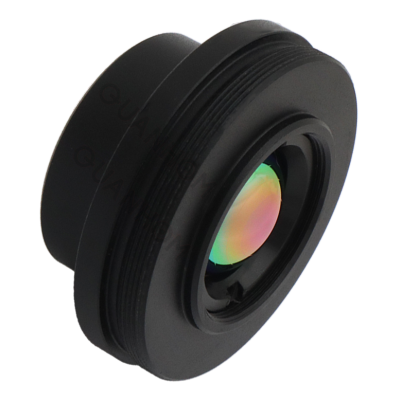

Dual FOV Infrared Lens, can be applied to Security and Surveillance.

Fixed Athermalized IR Lens 4mm f/1.1, can be applied to Security and Surveillance.

Fixed Athermalized IR Lens 4.3mm f/1.2, can be applied to Security and Surveillance.

Fixed LWIR Lens 6mm f/1.25, can be applied to Security & Surveillance.

Fixed Athermalized LWIR Lens 5.7mm f/1.0, can be applied to Enhanced Vision.

Fixed Athermalized IR Lens 6.7mm f/1.0, can be applied to Enhanced Vision.

Fixed LWIR Lens 6.8mm f/1.0, can be applied to Security & Surveillance.

Athermalized Infrared Lens | LWIR Lens 7.5mm f/1.2, can be applied to Enhanced Vision.

Athermalized Infrared Lens | LWIR Lens 7.5mm f/1.2, can be applied to Enhanced Vision.

Fixed LWIR Lens 7.5mm f/1.0, can be applied to Enhanced Vision.

Fixed LWIR Lens, can be applied to Thermal Imaging Body Temperature Screening.

Athermalized Infrared Lens | LWIR Lens 8.5mm f/1.0, can be applied to Enhanced Vision.

Fixed Athermalized IR Lens, can be applied to Security and Surveillance.

Fixed Athermalized LWIR Lens, small focal length, can be applied to airborne, security, surveillance, etc.

Fixed LWIR Lens 10.5mm f/1.0, can be applied to Enhanced Vision.

Fixed Athermalized IR Lens 13.4mm f/1.0, can be applied to Hand-held Thermal Imaging Devices.

Fixed Athermalized IR Lens 12.3mm f/1.0, can be applied to Security and Surveillance.

Athermalized Infrared Lens | LWIR Lens 13mm f/1.0, can be applied to Enhanced Vision.

Fixed Athermalized LWIR Lens, can be applied to Security and Surveillance.
